Before deciding how you will conduct your evaluation, it is important to be clear about what you want the evaluation to accomplish. This section will help you clarify your evaluation goals, refine questions to guide the evaluation, and think about what kind of evidence (i.e., indicators) to look for to answer your evaluation questions. Although the process from goals to indicators is described linearly here, in practice it is likely to be iterative: brainstorming evaluation questions may lead you to re-examine evaluation goals, and identifying indicators may lead you back to refining evaluation questions.

What are your goals for the evaluation?

You probably already have ideas about what you want the evaluation to accomplish. Taking the time to refine these ideas will help you focus your efforts and choose appropriate evaluation methods.

Consider these questions to refine your evaluation goals:

- Why are you planning the evaluation? Is it for accountability, to report the program's results to an organization or funder? Is it to learn whether the program is on the right track, to assess the program’s accomplishments, to improve the program, or something else?

- Who will use the evaluation’s results? Program managers? Staff? Current or potential funders? Government agencies? Teachers? School administrators? What kind of data will you need to collect to meet the needs of these different stakeholders? What information will they find most credible and easy to understand?

The answers to these questions should influence the methods you use to carry out your evaluation. If you decide, for example, that your goal is to generate evidence of your program’s success, you may want to focus on an outcome you are confident the program is achieving and select methods that will allow you to generalize results to all program participants. Alternatively, if your goal is to improve specific aspects of the program, you may want to focus on these by obtaining in-depth recommendations from participants and staff.

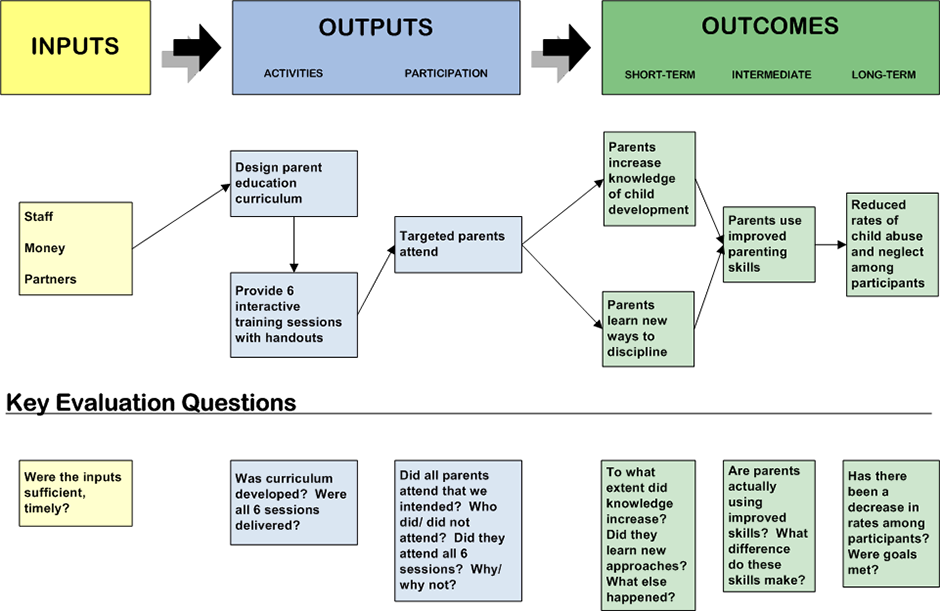

How do I develop evaluation questions?

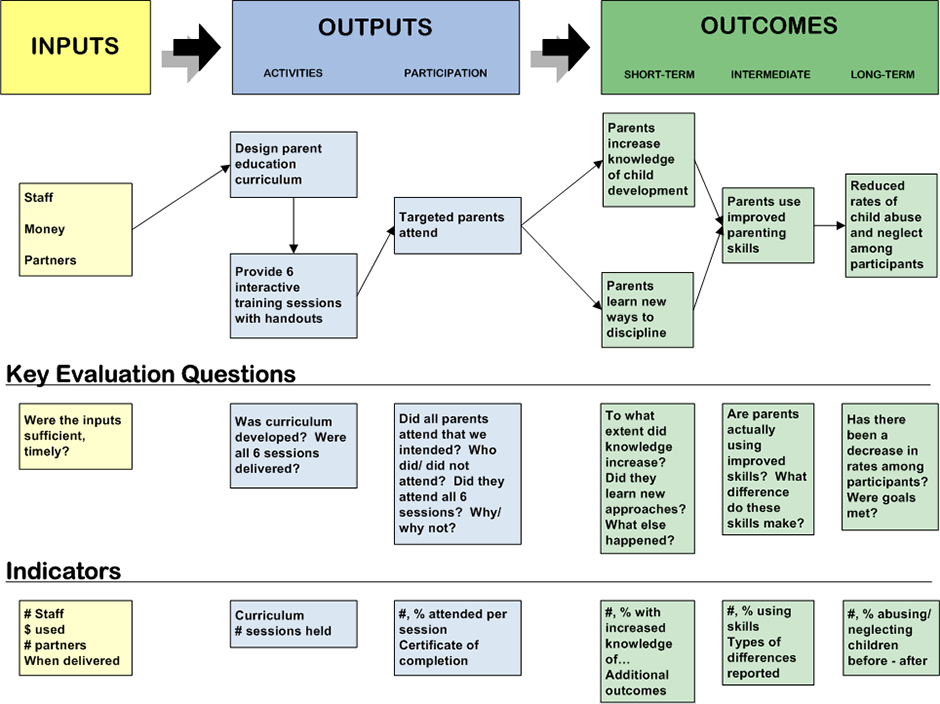

After deciding on your evaluation goals, you are ready to identify questions for the evaluation to answer. If you have already created a logic model (Step 2), developing evaluation questions should be fairly straightforward. Evaluation questions may ask about a single element in a logic model (e.g., how well is an output being implemented?), or they may focus on relationships between two or more elements of a program’s logic model (e.g., to what extent does a particular output result in a desired outcome?). The figure below shows the types of questions that can be derived from a logic model.

From Taylor-Powell, E., Jones, L., & Henert, E. (2002).

After you have identified potential evaluation questions, it is well worth spending time to refine and prioritize these questions. Asking inappropriate questions, asking too many questions, or asking questions with ambiguous wording will not help you meet your evaluation goals. So, what are good evaluation questions and how do you prioritize them?

Good evaluation questions fit with your intended purpose for conducting the evaluation.

Evaluations that seek to improve a program may ask questions about how well the program is being implemented, whether it is delivering its intended outputs, and to what extent the program is achieving short- and intermediate-term outcomes. Evaluations that seek to demonstrate whether a program worked as intended typically focus on outcomes and impacts.

Good evaluation questions are framed in ways that will yield more than yes or no answers.

Consider the following examples:

- To what extent were the planned activities completed? Why were or weren't they completed?

- To what extent are we achieving our outcomes? How close are we to achieving them? Why did or didn't we achieve them?

- How well are we managing our program? What additional staff and resources are needed to meet our objectives? How can we ensure that the program is replicable, cost-effective, and/or sustainable?

Good evaluation questions consider the concerns of multiple stakeholders.

Funders, policymakers, and program staff are likely to have different questions about your EE program. Policymakers and funders, for example, may be most concerned about the program’s long-term impacts on the community or environment, whereas program staff may be more interested in improving program delivery. Working with stakeholders to accommodate their concerns in your questions can improve buy-in and acceptance of evaluation findings down the road. Just keep in mind that no evaluation will succeed in being “all things to all people.” Some questions will have to be addressed in future evaluations. The following paragraphs offer advice for prioritizing questions according to your evaluation goals and available resources.

How do I prioritize evaluation questions?

You will likely generate many potential evaluation questions, far more than you will have the time and funding to pursue. To prioritize and narrow down your list, consider not only the resources you have available (e.g., time, money, and staff), but also how much usable information each question might generate. Which questions will yield the most practical information for the cost? Will the results be easily understood and credible to stakeholders? How likely is it that the information from a question will influence decision-making or lead to improvements to your EE program?

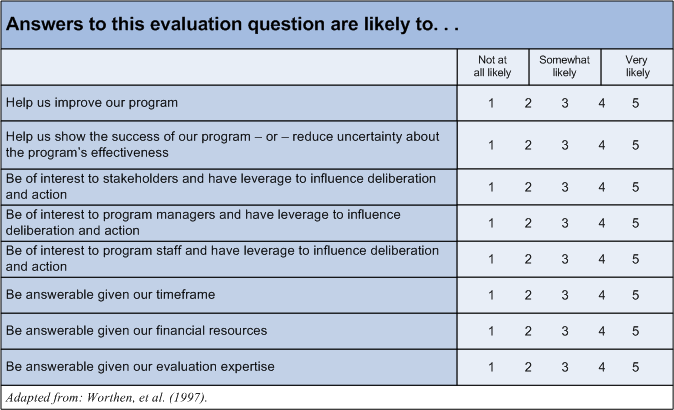

If you have difficulty reaching consensus with stakeholders and program staff about the priority of questions, you might use a table such as the one below. The process of ranking each question’s feasibility and importance may help build agreement on the evaluation’s focus as well as support and buy-in among stakeholders.
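Some teams keep this ranking in a spreadsheet or a short script instead of on paper. The Python sketch below shows one minimal way to do it, and it is not a prescribed method. The 1-5 rating scales, the scores given to the example questions, and the choice to multiply importance by feasibility are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Question:
    text: str
    importance: int   # hypothetical 1-5 rating agreed on with stakeholders
    feasibility: int  # hypothetical 1-5 rating given time, money, and staff


def score(question):
    # Assumed weighting: importance times feasibility (range 1-25).
    return question.importance * question.feasibility


def prioritize(questions):
    """Return the questions sorted from highest to lowest combined score."""
    return sorted(questions, key=score, reverse=True)


questions = [
    Question("To what extent were the planned activities completed?", 4, 5),
    Question("To what extent are we achieving our outcomes?", 5, 3),
    Question("To what extent has water quality in the watershed improved?", 5, 1),
]
for q in prioritize(questions):
    print(score(q), q.text)
```

Multiplying the two ratings pushes questions that are important but hard to answer down the list. A team that wants to keep such questions near the top might add the ratings instead, or give importance a heavier weight.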

The following resources provide more details on developing and prioritizing evaluation questions:

- Evaluation Handbook

W.K. Kellogg Foundation.

Beginner

This handbook contains a section on developing evaluation questions (pp. 51-54). The section gives examples of relevant evaluation questions, explains how to improve them and narrow down the list, and offers guidance on who to involve in the process.

- Evaluating EE in Schools: A Practical Guide for Teachers (.pdf)

Bennett, D.B. (1984). UNESCO-UNEP.

Beginner Intermediate

Chapter 1, "What Should I Evaluate?", discusses how to decide which aspects of student learning and which aspects of the program to evaluate.

- Measuring Progress: An Evaluation Guide for Ecosystem and Community-Based Projects (.pdf)

Ecosystem Management Initiative, University of Michigan (2004).

Intermediate

This detailed guide explains how to develop and prioritize evaluation questions as part of "Stage B, Developing an Assessment Framework: How Will You Know You Are Making Progress?". This particular section provides tips on brainstorming evaluation questions, lists of sample evaluation questions, worksheets, and guidance on which questions may be most important to evaluate given your circumstances.

How do I answer my evaluation questions?

Now that you have your evaluation questions, you need to determine how best to answer them. “Indicators” will help you to do so.

What are Indicators?

Indicators are measures that demonstrate whether a goal has been achieved. We rely on indicators in everyday life. For example, if you have a goal to lose weight and improve your overall health, you may measure success using indicators such as the number of pounds lost, a change in your body mass index, lowered cholesterol, or even an increase in your perceived energy level.

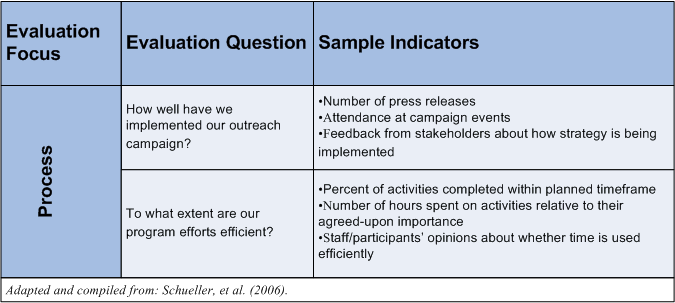

In an evaluation context, indicators provide the information needed to answer the evaluation questions. The figure below shows the types of indicators that can be used to answer questions aimed at different logic model components.

From Taylor-Powell, E., Jones, L., & Henert, E. (2002).

As the examples above suggest, it is often a good idea to use multiple indicators for each evaluation question, because relying on just one can distort your interpretation of how well a program is working. Suppose a workshop designed to foster recycling behavior measures that outcome with a single indicator: the total weight of recycled materials in a particular community. This indicator may be easy to measure (by calling local recycling facilities). However, it does not tell you how many households are participating, how much material each household recycles, or which materials are being recycled. Adding indicators, or choosing indicators that capture a wider range of information, will give a more complete and accurate picture of the benefits of your EE program.
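The minimal Python sketch below shows why a single total can mislead by computing several indicators from the same data. The pickup records, the number of households in the community, and the function name `recycling_indicators` are hypothetical. In practice, the data would come from recycling facilities or household surveys.

```python
from collections import defaultdict


def recycling_indicators(records, total_households):
    """Compute several indicators from (household_id, material, kg) records."""
    total_kg = sum(kg for _, _, kg in records)
    participants = {household for household, _, _ in records}
    kg_by_material = defaultdict(float)
    for _, material, kg in records:
        kg_by_material[material] += kg
    return {
        "total_kg": total_kg,
        "participation_rate": len(participants) / total_households,
        "kg_per_participating_household": (
            total_kg / len(participants) if participants else 0.0
        ),
        "kg_by_material": dict(kg_by_material),
    }


# Hypothetical records for a community of 10 households
records = [
    ("h1", "paper", 12.0),
    ("h1", "glass", 8.0),
    ("h2", "paper", 5.0),
    ("h3", "plastic", 3.0),
    ("h3", "paper", 2.0),
]
print(recycling_indicators(records, total_households=10))
```

In this made-up example, a 30 kg total comes from only 3 of 10 households, and most of it is paper. The total-weight indicator on its own would hide both facts.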

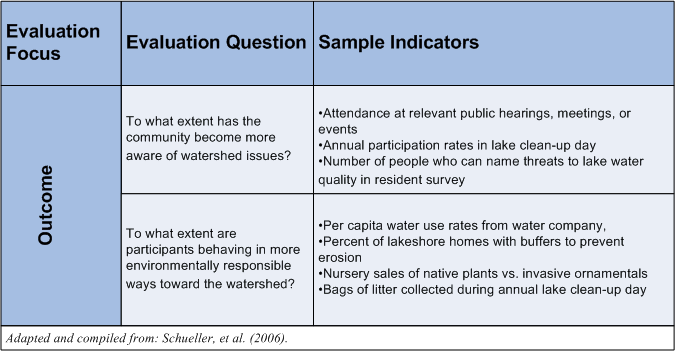

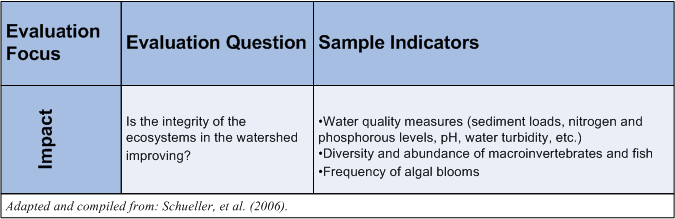

The figures below illustrate how multiple indicators can be used to answer questions about a community-based watershed education program. Questions and indicators are organized according to the potential focus of the evaluation: process or implementation issues, outcomes, and impacts. (For more on this topic, please see the Outcomes and Impacts page.) Note that a single evaluation is unlikely to try to address all of these, and that only a few sample indicators are offered for each question.

Process:

Process questions are concerned with how well the program is being delivered. Indicators may measure the number of outputs generated, participant and partner satisfaction with these outputs, or other aspects of program implementation.

Outcomes:

Outcome-focused questions look for evidence that the program is benefiting participants, visitors, etc. The questions and indicators below look for evidence of change in individuals’ awareness and behaviors over time.

Impacts:

Impacts are the broader, long-term changes that your program brings about in the community or the environment. The questions and indicators below look for evidence that environmental quality has improved over time.

For another table that identifies indicators for specific evaluation questions, in this case about a healthcare program, refer to page 48 in Chapter 4 of the W.K. Kellogg Foundation Logic Model Development Guide (.pdf).

What are good Indicators?

Indicators are most useful when they are:

- Relevant and useful to decision-making (e.g., will stakeholders care about this measure?)

- Representative of what you want to find out (e.g., does the indicator capture what you need to know about your program?)

- Easy to interpret

- Sensitive to change

- Feasible and cost-effective to obtain

- Easily communicated to a target audience

Adapted from Ecosystem Management Initiative (2006)

Indicators should also have specific targets. Targets make explicit the standard of success for your program. For example, if you want to assess whether your program increased student knowledge of water pollution, your indicator could specify “at least 80% of students will correctly identify three common sources of water pollution.” A targeted indicator like this provides a more meaningful standard of success than one without a target. If fewer than 80% of students can name three pollution sources, you will want to make changes to the program or reconsider your target.
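Checking a targeted indicator like this one is simple arithmetic. The Python sketch below assumes you have recorded, for each student, how many common sources of water pollution they correctly identified. The scores and the function name `meets_target` are hypothetical.

```python
def meets_target(correct_counts, required=3, target_percent=80):
    """Return (share of students meeting the bar, whether the target is met).

    correct_counts: pollution sources each student identified correctly.
    required: sources a student must name to count as a success (3 here).
    target_percent: share of students who must succeed (80 here).
    """
    if not correct_counts:
        raise ValueError("no student results to evaluate")
    successes = sum(1 for n in correct_counts if n >= required)
    share = successes / len(correct_counts)
    # Integer comparison so that exactly 80% counts as "at least 80%".
    met = successes * 100 >= target_percent * len(correct_counts)
    return share, met


# Hypothetical post-program results for a class of 10 students
scores = [3, 4, 2, 3, 5, 3, 1, 3, 3, 4]
print(meets_target(scores))  # (0.8, True)
```

Here 8 of 10 students named at least three sources, which exactly meets the "at least 80%" target.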

If your program already has well-specified objectives, you may be able to extract targeted indicators from these objectives. Consider the following two alternative objectives for a watershed education program:

"As a result of the watershed outreach campaign...

- Option 1: ...the public will be more committed to protecting the watershed.” This objective is not ideal from a measurement perspective: i.e., the indicator is not explicit. Which public? What does it mean to be "committed to protecting the watershed?" How much "more" commitment will there be?

- Option 2: ...adult participation in lake clean-up day activities will increase by 50%." Note how this option offers an indicator for measuring "commitment to protecting the watershed;" i.e., participation in lake clean-up day activities. In addition, it includes a "target;" i.e., the expected increase. You can show that you have met this objective if participation has increased by at least 50% compared with the past (baseline) level of participation.
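You can check Option 2's target against the baseline in the same way. In this minimal Python sketch, the participation counts are hypothetical. Comparing whole numbers rather than decimals avoids rounding problems at exactly 50%.

```python
def percent_change(baseline, current):
    """Percentage change from the baseline count to the current count."""
    if baseline <= 0:
        raise ValueError("baseline must be a positive count")
    return (current - baseline) / baseline * 100


def objective_met(baseline, current, target_increase_percent=50):
    """True if current participation is at least the target percent above baseline.

    Compares integers (current * 100 vs. baseline * (100 + target)) so that an
    increase of exactly 50% is not missed through floating-point rounding.
    """
    if baseline <= 0:
        raise ValueError("baseline must be a positive count")
    return current * 100 >= baseline * (100 + target_increase_percent)


# Hypothetical counts of adult participants in lake clean-up day
baseline_adults = 40  # e.g., average participation in previous years
print(percent_change(baseline_adults, 60), objective_met(baseline_adults, 60))  # 50.0 True
print(percent_change(baseline_adults, 55), objective_met(baseline_adults, 55))  # 37.5 False
```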

To learn more about indicators, consult the following resources:

- Evaluation Sourcebook (.pdf)

Unique Resource

One resource that provides a wealth of relevant information for users at all levels is the Evaluation Sourcebook (.pdf). For a variety of ecological, social, and organizational objectives, this resource identifies evaluation questions, indicators, and data sources, and describes “real world” examples. Pages that environmental educators may find particularly useful include:

- p. 74 - community participation and engagement

- p. 82 - environmentally responsible stewardship behaviors

- p. 144 - environmental knowledge, awareness, and concern

- p. 198 - education and outreach campaigns

- Selecting Performance Indicators (.pdf)

USAID Center for Development Information and Evaluation, 1996.

Beginner Intermediate

This resource from USAID defines indicators, explains why they are important, describes the steps involved in selecting performance indicators, and presents seven criteria for assessing indicators.

- W.K. Kellogg Foundation Logic Model Development Guide (.pdf)

W.K. Kellogg Foundation (2004).

Beginner Intermediate

Chapter 4, "Using Your Logic Model to Plan for Evaluation," offers practical advice on developing indicators, including a list of sample indicators, a checklist to assess their quality, and a worksheet to help match questions with indicators.

- Indicators and Information Systems for Sustainable Development (.pdf)

The Sustainability Institute (1998).

Beginner Intermediate

This document discusses indicators of sustainable development. Chapter 4 discusses the challenges of identifying good indicators, Chapter 7 provides a variety of sample indicators, and Chapter 8 addresses what to do with indicators once they are in hand.

- Indicators for Education for Sustainable Development: a report on perspectives, challenges and progress (.pdf)

Reid et al. Centre for Research in Education and the Environment, University of Bath (2006).

Intermediate

This document discusses indicators of Education for Sustainable Development (ESD). It includes discussion of indicators in general, their specific application to ESD, applications in policy making, and perspectives on current ESD indicator projects taking place in Europe.

References

Human Rights Resource Center, University of Minnesota. (2000). The Human Rights Education Handbook: Effective Practices for Learning, Action, and Change. Downloaded August 13, 2006, from: www1.umn.edu/humanrts/edumat/hreduseries/hrhandbook/toc.html

Schueller, S.K., S.L. Yaffee, S.J. Higgs, K. Mogelgaard, and E.A. DeMatia. (2006). Evaluation Sourcebook: Measures of Progress for Ecosystems and Community-Based Projects. Ecosystem Management Initiative, University of Michigan, Ann Arbor, MI. Downloaded August 13, 2006, from: www.snre.umich.edu/ecomgt/evaluation/EMI_SOURCEBOOK_August_2006.pdf

Taylor-Powell, E., Jones, L., & Henert, E. (2002). “Section 7: Using Logic Models in Evaluation: Indicators and Measures,” in Enhancing Program Performance with Logic Models. Retrieved July 30, 2007, from the University of Wisconsin-Extension web site: http://www1.uwex.edu/ces/lmcourse/

Worthen, B.R., Sanders, J.R., & Fitzpatrick, J.L. (1997). Program Evaluation: Alternative approaches and practical guidelines. New York: Longman Publishers, USA.